Matteo Stefanini, Marcella Cornia, Lorenzo Baraldi, Massimiliano Corsini, Rita Cucchiara

Proceedings of the International Conference on Image Analysis and Processing (ICIAP 2019)

June 2019

Abstract

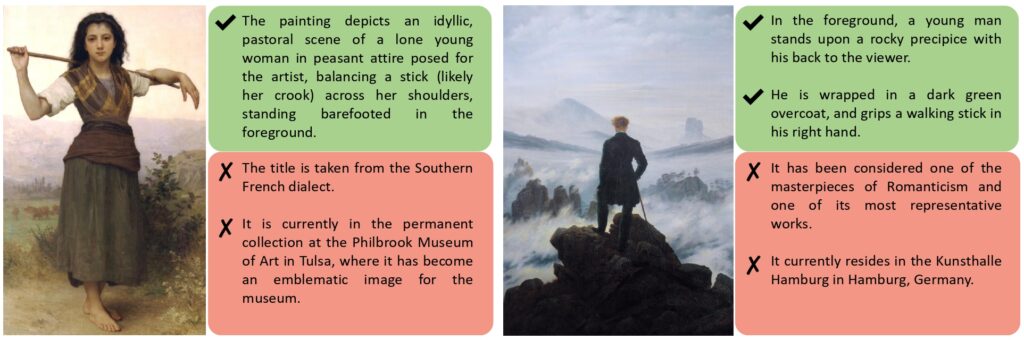

As vision and language techniques are widely applied to realistic images, there is a growing interest in designing visual-semantic models suitable for more complex and challenging scenarios. In this paper, we address the problem of cross-modal retrieval of images and sentences coming from the artistic domain. To this aim, we collect and manually annotate the Artpedia dataset that contains paintings and textual sentences describing both the visual content of the paintings and other contextual information. Thus, the problem is not only to match images and sentences, but also to identify which sentences actually describe the visual content of a given image. To this end, we devise a visual-semantic model that jointly addresses these two challenges by exploiting the latent alignment between visual and textual chunks. Experimental evaluations, obtained by comparing our model to different baselines, demonstrate the effectiveness of our solution and highlight the challenges of the proposed dataset.

Type: Conference Paper

Publication: International Conference on Image Analysis and Processing (ICIAP 2019)

Dataset: artpedia.zip

Full Paper: link pdf

Please cite with the following BibTeX:

@inproceedings{stefanini2019artpedia,
  title={{Artpedia: A New Visual-Semantic Dataset with Visual and Contextual Sentences}},
  author={Stefanini, Matteo and Cornia, Marcella and Baraldi, Lorenzo and Corsini, Massimiliano and Cucchiara, Rita},
  booktitle={Proceedings of the International Conference on Image Analysis and Processing},
  year={2019}
}